Posted on April 12, 2024

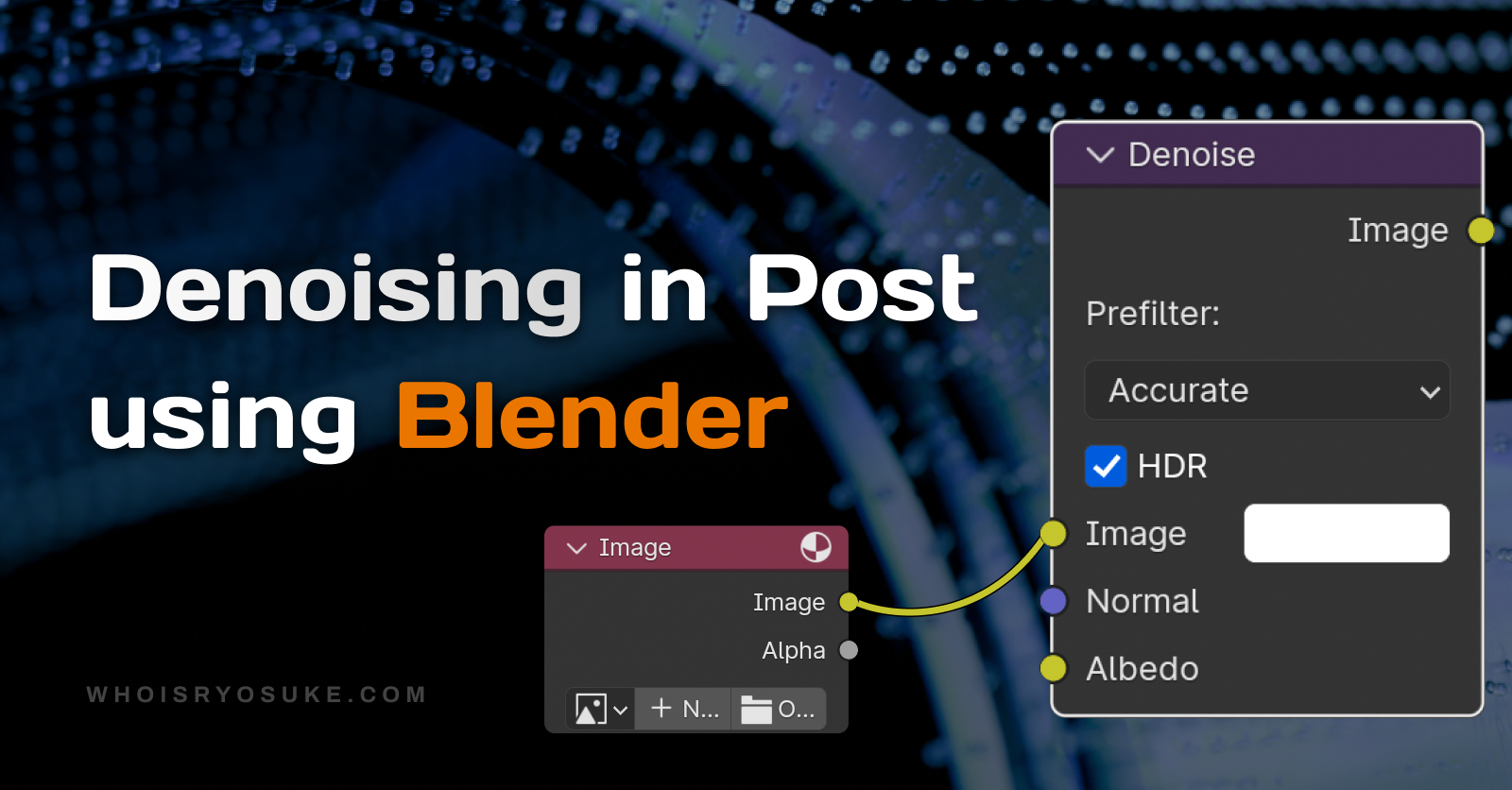

Recently I’ve been rendering a lot of projects in Blender, from large 4K still images to 1080p videos. While rendering out all these projects, I kept having renders fail during the denoising process. After I complained about it online, a few people told me they denoise in post production instead.

I explored the process of denoising Blender renders in post production using the compositor, and weighed the pros and cons of the new, extended workflow. If you’re just interested in the results, scroll down to see a comparison of render times.

How to Denoise in Post

There are a couple of ways of denoising in post production. The one we’ll primarily focus on today is Blender’s built-in Compositor. But you could also use 3rd party software to accomplish a similar goal, albeit at the cost of said software.

Compositor

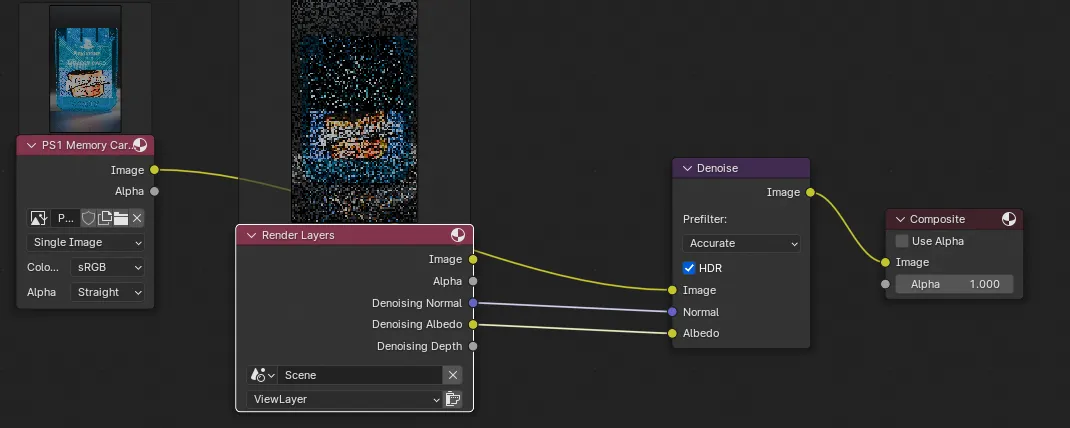

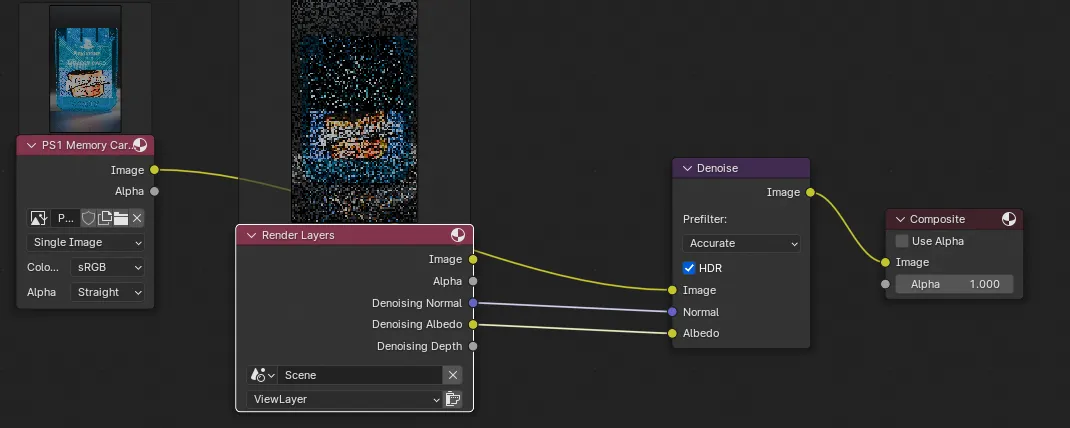

If you’re not familiar, Blender has a tab called “Compositor”. This features the Compositor “panel”, which is essentially a node graph for post processing and compositing one or more images.

You can use it to change photographic settings of a scene, like the exposure levels or hue, saturation, and contrast. Or you could combine images, or use data from the scene like UVs or ambient occlusion data to power the composition.

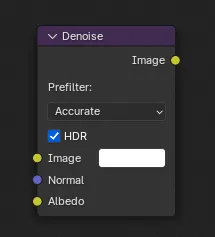

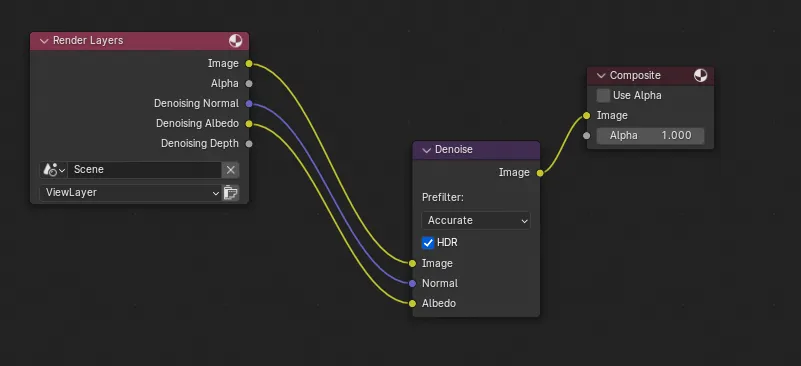

In our case, we want to use the Denoise node. This node does the same job as checking “Denoise” under the render settings. Because it’s a separate node, we can change the workflow up: render out a noisy image first, then composite it into a denoised version later.

Here’s how that workflow looks:

Render image with noise

- Render image with noise (aka “Denoise” unchecked)

- Save the image somewhere
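If you’d rather script this step than click through the UI, here’s a minimal sketch that builds a headless Blender command with Cycles denoising turned off. The `blender` executable name, the file paths, and the `noisy_render_command` helper are placeholders I made up for illustration; the flags themselves (`-b`, `--python-expr`, `-o`, `-F`, `-f`) are Blender’s standard command line arguments.

```python
import shlex


def noisy_render_command(blend_file, output_prefix, frame=1, blender="blender"):
    """Build a headless Blender command that renders one frame with denoising off."""
    disable_denoise = "import bpy; bpy.context.scene.cycles.use_denoising = False"
    return [
        blender,
        "-b", blend_file,                  # open the file without the UI
        "--python-expr", disable_denoise,  # must come before -f: arguments run in order
        "-o", output_prefix,               # "//" means relative to the .blend file
        "-F", "PNG",                       # output file format
        "-f", str(frame),                  # render this single frame
    ]


cmd = noisy_render_command("memory_card.blend", "//renders/noisy_")
print(shlex.join(cmd))
# To actually run it: subprocess.run(cmd, check=True)
```

Blender appends the frame number and extension to the output prefix, so the noisy image ends up next to your .blend file under `renders/`.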

Compositing

-

Make a new Blender file (optional) — append it with a “_compositing” filename to distinguish it.

-

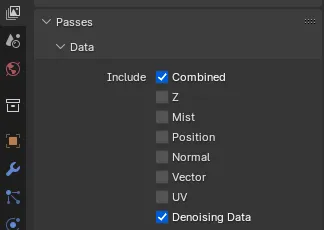

Go to View Layer tab. Under the “Include” section check the Denoising Data.

-

Open the Compositor (either tab on top - or change one of your panels to it)

-

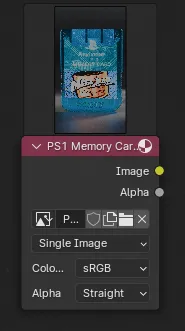

Drag and drop the image into the compositor. It’ll appear as an node with an

ImageandAlphaoutput.

-

Add a Denoise node

-

Connect the image to Image on Denoise

-

Connect the denoise albedo and normal data to the Denoise node

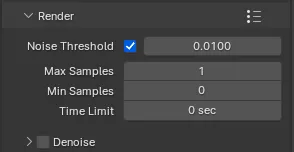

-

Go to the Render Properties tab and turn Render samples down to 1. This makes it much faster since ideally we just need the denoise data.

-

Render the scene - it should be much faster than the original render.

-

Your image should be denoised now
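The compositing steps above can also be scripted with Blender’s Python API. This is a rough sketch, not something I’d call production ready: it assumes the Cycles render engine and the node and socket names from around Blender 4.1 (newer versions have been reworking the compositor API), and `build_denoise_graph` is a name I made up.

```python
# Node type names and socket names as of roughly Blender 4.1.
# Treat them as assumptions if you're on a much older or newer version.
NODES = {
    "image": "CompositorNodeImage",     # the saved noisy render
    "layers": "CompositorNodeRLayers",  # source of the denoising passes
    "denoise": "CompositorNodeDenoise",
    "output": "CompositorNodeComposite",
}

# (from node, output socket, to node, input socket)
LINKS = [
    ("image", "Image", "denoise", "Image"),
    ("layers", "Denoising Normal", "denoise", "Normal"),
    ("layers", "Denoising Albedo", "denoise", "Albedo"),
    ("denoise", "Image", "output", "Image"),
]


def build_denoise_graph(noisy_image_path):
    """Rebuild the compositor tree from the steps above. Only works inside Blender."""
    import bpy  # imported here so the file still loads outside Blender

    scene = bpy.context.scene
    # Same as checking "Denoising Data" on the View Layer tab
    bpy.context.view_layer.cycles.denoising_store_passes = True
    scene.cycles.samples = 1  # we only need the albedo/normal passes
    scene.use_nodes = True

    tree = scene.node_tree
    tree.nodes.clear()
    nodes = {key: tree.nodes.new(idname) for key, idname in NODES.items()}
    nodes["image"].image = bpy.data.images.load(noisy_image_path)

    for src, out_socket, dst, in_socket in LINKS:
        tree.links.new(nodes[src].outputs[out_socket], nodes[dst].inputs[in_socket])
    return tree
```

To try it, paste it into the Scripting tab, call `build_denoise_graph("/path/to/your/noisy.png")` with your own file path, then render as usual.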

Working with animations

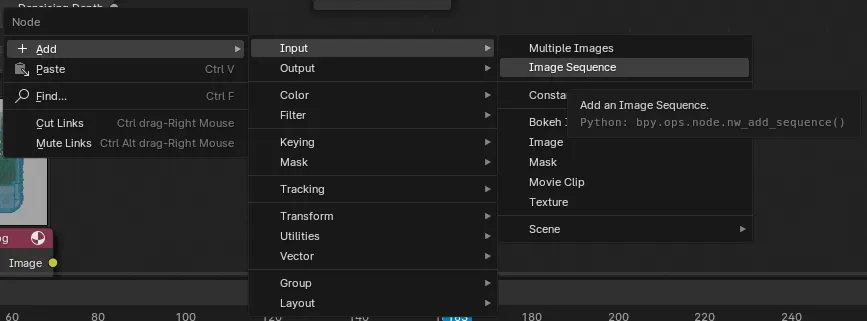

You can also import image sequences into the Blender compositor, so it can use all the frames (e.g. 0-100) and render them into a single animation. Make sure to change your output format to a movie file like .mp4.

To add an image sequence, right click anywhere in the Compositor node graph and go to Add > Input > Image Sequence.
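Here’s a hedged sketch of the same idea in Python. `sequence_span` is a plain helper that figures out the frame range from your rendered filenames, and `load_sequence` points an existing compositor Image node at the sequence. Both names are mine, the filename pattern is an assumption, and the frame offset logic reflects my understanding of how Blender maps scene frames to file numbers, so double check the first and last frames before rendering a long animation.

```python
import re


def sequence_span(filenames):
    """Return (first_frame, frame_count) from names like 'noisy_0042.png'."""
    frames = sorted(
        int(re.search(r"(\d+)\.\w+$", name).group(1)) for name in filenames
    )
    return frames[0], frames[-1] - frames[0] + 1


def load_sequence(image_node, first_file, first_frame, frame_count):
    """Point a compositor Image node at a sequence. Only works inside Blender."""
    import bpy  # imported here so the helper above runs outside Blender

    image = bpy.data.images.load(first_file)
    image.source = "SEQUENCE"
    image_node.image = image
    image_node.frame_duration = frame_count
    image_node.frame_start = first_frame
    # Assumption: file number = scene frame - frame_start + 1 + frame_offset,
    # so this offset makes scene frame N read file N.
    image_node.frame_offset = first_frame - 1

    scene = bpy.context.scene
    scene.frame_start = first_frame
    scene.frame_end = first_frame + frame_count - 1
    # Movie output settings (property names as of roughly Blender 4.1)
    scene.render.image_settings.file_format = "FFMPEG"
    scene.render.ffmpeg.format = "MPEG4"
    scene.render.ffmpeg.codec = "H264"


names = [f"noisy_{i:04d}.png" for i in range(101)]  # frames 0-100
first_frame, frame_count = sequence_span(names)
print(first_frame, frame_count)
```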

Afterthoughts

This process definitely works, and helps a lot if your renders or animations are failing consistently on the denoise part. It ensures you can render faster and less intensively (since denoising is often the most CPU or GPU intensive task — particularly at scale like 4k+).

But as you can see, it definitely takes a bit of setup every time. And if you’re using a workflow that has a queue system, like Flamenco, you basically have to do everything twice.

I also noticed a difference in image quality when I denoised in post. My images looked more washed out (see comparison below).

It’d definitely be interesting to see if there was a Blender plugin or solution that could automate this process. Ideally I want to be able to hit a render button and have the noisy image queued up, then the denoise to also be queued up without setup. It’s hard to ask though, since the compositor step requires a reference to an image file or sequence.
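As a thought experiment, the automation could be as simple as a wrapper that runs the expensive render once and then retries only the cheap denoise step. This is a sketch of that idea, not an existing Blender plugin or Flamenco feature; `run_pipeline` and the two commands are hypothetical, and the second command assumes you’ve already set up the “_compositing” file.

```python
import subprocess


def run_pipeline(render_cmd, denoise_cmd, run=subprocess.run, denoise_attempts=3):
    """Render once, then retry only the denoise step if it fails.

    Returns the number of denoise attempts it took.
    """
    run(render_cmd, check=True)  # expensive step; the noisy image is now on disk
    for attempt in range(1, denoise_attempts + 1):
        try:
            run(denoise_cmd, check=True)
            return attempt
        except subprocess.CalledProcessError:
            continue  # the noisy render is untouched, so just try again
    raise RuntimeError("denoise step kept failing; the noisy render is still on disk")


# Hypothetical commands, shown only to illustrate the shape of the call:
# run_pipeline(
#     ["blender", "-b", "scene.blend", "-f", "1"],
#     ["blender", "-b", "scene_compositing.blend", "-f", "1"],
# )
```

The point of splitting it this way is that a denoise crash only costs you the couple of seconds the denoise step takes, not the full render.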

Post Processing in other software

You could also denoise in 3rd party software like Blackmagic Design’s Fusion. You can find some good success with this route because, go figure, you’re using industry-grade (and priced) software.

I honestly didn’t dive too deep into this, because if I’m using Blender I’m probably not paying for high-end software. And if I am, it’s a whole different bespoke pipeline (more VFX-based, in Fusion’s case) that doesn’t apply to most Blender users.

Comparison of render times

To test this I used my recent PS1 memory card project and rendered out a 1080 by 1920 image - once with denoise enabled, then again using the entire compositor process.

For reference, here are some of my PC specs:

- Intel Core i9 13900K

- NVIDIA GeForce RTX 4080

- 2x 16GB DDR5 RAM

Here were my settings for my render:

- Cycles

- 4096 samples

- Tiling enabled - 2048

- 1080 x 1920
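If you want to reproduce these settings from a script, here’s a small sketch. The property paths are the ones on `bpy.context.scene` in Blender 3.0 and later as far as I know; the `apply_settings` helper and the stand-in scene object are just for illustration, so the snippet runs outside Blender too.

```python
from types import SimpleNamespace

# Property paths on bpy.context.scene (names as of Blender 3.0+).
BENCHMARK_SETTINGS = {
    "render.engine": "CYCLES",
    "cycles.samples": 4096,
    "cycles.use_auto_tile": True,  # "Tiling enabled"
    "cycles.tile_size": 2048,
    "render.resolution_x": 1080,
    "render.resolution_y": 1920,
}


def apply_settings(scene, settings):
    """Set each dotted property path (e.g. 'cycles.samples') on the scene."""
    for path, value in settings.items():
        *parents, attr = path.split(".")
        target = scene
        for name in parents:
            target = getattr(target, name)
        setattr(target, attr, value)


# Stand-in object so this runs anywhere; inside Blender, pass bpy.context.scene.
fake_scene = SimpleNamespace(render=SimpleNamespace(), cycles=SimpleNamespace())
apply_settings(fake_scene, BENCHMARK_SETTINGS)
print(fake_scene.cycles.samples, fake_scene.render.resolution_x, fake_scene.render.resolution_y)
```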

Here were the results:

Control:

- Render with denoise (baseline): 01:46.98 - 1011.14M memory

Compositor:

- Render without denoise: 01:45.44 - 978.5M memory

- Render with denoise compositor (1 sample): 00:02.20 - 964.5M memory

- Compositor denoise combined time: 01:47.64 - Technically takes longer by a second or so

- Render with denoise compositor (4096 samples - directly - not from image): 01:47.03 - Basically the same time as manually combining them myself. The only issue here is that if denoising failed, I’d have no image to work from after all that render time: render and denoise are combined, so no noisy image is saved before the denoise step, and a crash is a full loss.

Seems that render time isn’t greatly affected or improved by this process. Even when I’m rendering 4K, denoising is intensive but only takes about 2-3 seconds.
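For anyone who wants to check the math, here’s the combined time worked out from the two compositor-workflow numbers above. The `to_seconds` helper is just something I wrote for this; it assumes the `MM:SS.ss` format Blender shows in the render window.

```python
from decimal import Decimal


def to_seconds(stamp):
    """Convert a 'MM:SS.ss' render time into seconds."""
    minutes, seconds = stamp.split(":")
    return int(minutes) * 60 + Decimal(seconds)


baseline = to_seconds("01:46.98")  # render with denoise enabled
combined = to_seconds("01:45.44") + to_seconds("00:02.20")  # noisy render + compositor denoise

print(combined, combined - baseline)
# prints "107.64 0.66"
```

So the two-step workflow comes out to 01:47.64, about two thirds of a second slower than the baseline.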

Should you denoise in post?

I’d probably only recommend this method if you’re rendering anything where denoising is crashing Blender.

For example, on larger images like 4K and above, denoising can sometimes fail even on my high end machine. So it’d benefit me to render out a noisy image first — just so I don’t have to waste compute time on re-rendering it again just to denoise it.

But for most cases, I usually just combine the denoising to save myself the time. For example, recently I had to render out some PS1 memory card videos for social. There are 5 different colors, and each one had multiple camera views to render.

I ended up rendering out over 20 videos. Rather than waste time setting up 20+ compositor files to denoise, I just denoised immediately. I had maybe 1 or 2 renders fail on a frame or two? But thanks to Flamenco (the Blender open source render farm), I just had to requeue them and they rendered the next time around 🤘

Refs

- Ryosuke (@whoisryosuke) on Threads

- How to DENOISE A RENDER in Blender 2.9 | Blender Rendering Tutorial

- Tutorial - Denoise AFTER You’ve Rendered Your Scene

- Remove noise from your footage in After Effects without any plugins!!

- How To Remove Noise From Video in After Effects - No Plugins

- How to Setup Compositor Denoising for Blender 3.0 [Read Desc]

- Fusion 18 | Blackmagic Design